Generative AI at SeaStar Quality Conference

The online streaming sessions at SeaStar on the morning of Oct. 18 were very interesting.

The keynote, AI – Your Testing Frenemy for Enhanced Software Quality, by Iryna Suprun from Microsoft, was a great, detailed introduction to how testers can use AI.

Her Medium account also includes numerous examples.

She went into some concrete details on prompt engineering:

- Task – mandatory: start with a verb and articulate the goal clearly.

- Context – user background, environment, what success looks like

- Exemplar

- Persona – important: who do you want ChatGPT (Bard, etc.) to impersonate?

- Format and Tone – nice to have
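The five components map naturally onto a small template. The following is a minimal Python sketch of my own, not code from the talk: `PromptSpec` and `build_prompt` are hypothetical names, and the only rule enforced is that the mandatory Task is present, with the remaining parts joined in the order listed above.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the five prompt components from the talk."""
    task: str                  # mandatory: starts with a verb, states the goal
    context: str = ""          # user background, environment, what success looks like
    exemplar: str = ""         # an example of the desired output
    persona: str = ""          # who the model should impersonate
    format_and_tone: str = ""  # nice to have


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty components, in the order listed in the talk."""
    if not spec.task.strip():
        raise ValueError("Task is mandatory")
    parts = [spec.task, spec.context, spec.exemplar, spec.persona, spec.format_and_tone]
    # Empty components are simply left out; each kept part becomes a paragraph.
    return "\n\n".join(p.strip() for p in parts if p.strip())
```

Leaving a component empty omits it, which makes it easy to compare prompt variants with and without, say, a persona.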

After a live demo of simple code generation and testing, including the issues they surfaced, she mentioned that her slide graphics were all AI-generated. She also used AI to suggest her talk title and abstract, but ultimately wrote her own. Below is her example prompt for creating a short Python script.

Copying Files from Source to Target Folder

Task:

Write a python script that copies all files from source folder, including files in subfolders in one folder and changes their extension to txt.

Engineered Prompt:

Create a Python script that recursively copies all files from a source folder, including files in subfolders, into a single destination folder while changing their file extensions to txt.

Imagine you have a source folder called “source folder” with various files and subfolders containing more files. Your goal is to create a Python script that extracts all these files and their subfolders’ contents into a single destination folder while changing the file extensions to txt.

You are an experienced Python software engineer with a deep understanding of file manipulation and directory traversal. You have a strong grasp of Python programming and are comfortable with handling file operations and recursion.
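For comparison with whatever a model produces, here is a minimal hand-written sketch of what the engineered prompt asks for, using only the standard library. It is my own illustration, not code from the talk. The function name `copy_flatten_as_txt` is hypothetical, and the collision handling (appending `_1`, `_2`, and so on when two subfolders contain files with the same name) is an assumption, since the prompt does not say what should happen in that case. It also assumes the destination folder is not inside the source folder.

```python
import shutil
from pathlib import Path


def copy_flatten_as_txt(source: Path, destination: Path) -> list[Path]:
    """Copy every file under `source` (recursively) into the single folder
    `destination`, giving each copy a .txt extension. Returns the paths written.
    Assumes `destination` is not inside `source`."""
    destination.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []
    # sorted() materializes the file list before any copying starts.
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue  # skip directories; rglob already visits their contents
        target = destination / (src.stem + ".txt")
        # Files from different subfolders can share a name; add a counter
        # instead of silently overwriting an earlier copy.
        counter = 1
        while target.exists():
            target = destination / f"{src.stem}_{counter}.txt"
            counter += 1
        shutil.copy2(src, target)  # copies content and metadata
        written.append(target)
    return written
```

For example, `copy_flatten_as_txt(Path("source"), Path("flat_txt"))` copies everything under `source` into `flat_txt`. Having a reference version like this makes it easier to review what the model generates, for instance how it handles duplicate file names.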

Nikolay Advolodkin from Sauce Labs talked about ChatGPT-4 Unleashed: Revolutionizing Web Development from Idea to Deployment.

He outlined his experiments with ChatGPT-3.5 and ChatGPT-4 and showed examples of various code generation outputs: a Next.js website, Cypress and Playwright end-to-end tests, GitHub Actions, Netlify, and Azure DevOps. He described or illustrated many of the issues he found.

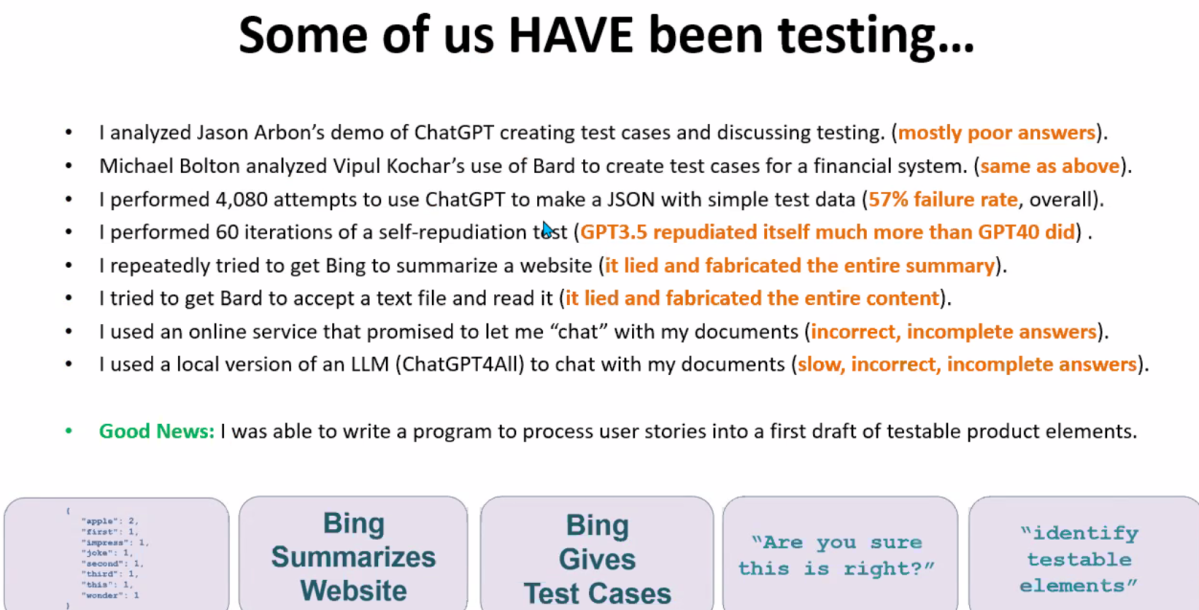

James Bach from Satisfice gave his talk Let’s Think Critically About AI in Testing. James ran numerous tests and showed his results (also available on GitHub). AIs are not good at direct tasks such as sorting medium-sized lists (more than 10 items). The data also showed wide variation in the results of repeating the same prompt; even setting the ChatGPT temperature to 0 (least variability) significantly reduced the variability but did not entirely remove it.
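Sorting makes a convenient probe precisely because the correct answer is trivial to check. Here is a minimal sketch of an oracle for repeated runs, assuming the model's replies have already been parsed into lists of integers (the API calls and the parsing are omitted). The function names are hypothetical, and this is not Bach's own harness.

```python
from collections import Counter


def check_sorted_answer(original: list[int], answer: list[int]) -> list[str]:
    """Check a model's claimed sort of `original`: same items, ascending order.
    Returns the problems found (an empty list means the answer is correct)."""
    problems = []
    # Multiset comparison catches dropped, duplicated, or invented items.
    if Counter(answer) != Counter(original):
        problems.append("items added, dropped, or changed")
    # Any adjacent pair out of order means the list is not sorted.
    if any(a > b for a, b in zip(answer, answer[1:])):
        problems.append("not in ascending order")
    return problems


def variability(answers: list[list[int]]) -> Counter:
    """Count how often each distinct answer appeared across repeated runs."""
    return Counter(tuple(a) for a in answers)
```

Running `variability` over the answers to many identical prompts gives a simple picture of reproducibility: a single key means every run agreed.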

James also illustrated other well-known issues with AI, such as hallucinations, self-repudiation, etc.

Summary of issues:

For some details, see ChatGPT Sucks at Being a Testing Expert.

Finally, there were extensive discussions during the Panel Discussion – Unlocking Efficiency: Exploring ML Models for Software Testing, and during the “workshop” to Define Principles of Collaborating with AI without Compromising Quality.

One interesting observation relates back to my earlier post on testing AI-generated code: while we typically don’t think we need to retest everything after a small code change made by a human, all bets seem to be off with AI, and a full regression run may be needed for each change an AI makes.

Generative AI is rapidly evolving and improving. I think there was general consensus that it can help humans by generating ideas (or first drafts). There was much less consensus about how solid the output really is without human intervention. There was also a lot of discussion about open versus proprietary models and how to protect private data.

Scott Aziz, CTO of AgileAI Labs, Inc., a “low-code” app development platform, came close to giving a product pitch in his talk Unlocking Agile Team Productivity: A Modern Approach.

His outline for Embracing AI in Agile: Next Steps

Research & Experiment

Develop an actionable roadmap for Agile teams to begin integrating AI into their processes.

- Start with free tools.

- Push the limits with prompt architecture.

- Start training.

He claims the output becomes almost predictable with sufficiently detailed prompts, once you get the prompts right (contrast this with Bach’s experiments).

You need a very mature prompt (though he gave no examples). Most AIs allow you to start the training process. He claims you can use them for requirements enhancements (see screenshot below) or for robust, complete test cases.

Recommended free tools: ChatGPT-4 and Google Bard

- Keep a log of prompt attempts (James Bach has logged thousands).

- Try to provide guidance.

- See some basic results.

- Document successes and failures (James Bach notes many failures).
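One lightweight way to follow the advice to keep a log and document successes and failures is an append-only JSON Lines file. This is my own minimal sketch, not something shown in the talk; the function names, file layout, and field names are assumptions.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_prompt_attempt(log_file: Path, model: str, prompt: str,
                       response: str, success: bool, notes: str = "") -> dict:
    """Append one prompt attempt to `log_file` as a JSON line; return the record."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
        "response": response,
        "success": success,
        "notes": notes,
    }
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def success_rate(log_file: Path) -> float:
    """Fraction of logged attempts marked successful (0.0 if there are none)."""
    if not log_file.exists():
        return 0.0
    lines = [line for line in log_file.read_text(encoding="utf-8").splitlines()
             if line.strip()]
    if not lines:
        return 0.0
    records = [json.loads(line) for line in lines]
    return sum(r["success"] for r in records) / len(records)
```

Because each attempt is one self-contained line, the log can grow to thousands of entries and still be filtered or summarized with a few lines of code.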

Why so little detail? Perhaps because, as he mentioned, his company holds patents on training, models, etc.

I think you can still get the $20 pass for recordings: https://quality.seastarconf.com